Evaluation Criterion

To participate in the MCT challenge, a participant must conduct at least one experiment. To be included in the ranking, however, all three experiments must be conducted. The overall ranking is computed in two steps. First, for each subset of the NLPR_MCT dataset, the tracking accuracies of the three experiments are averaged according to Formula (1), and participants are ranked on that subset. Second, each participant's final position is determined by combining the rankings on all subsets according to Formula (2). For a single experiment on a subset, tracker performance is measured by the Multi-Camera Tracking Accuracy (MCTA) defined in Formula (3). The same evaluation criterion is used in all experiments.

$$\overline{MCTA} = \frac{1}{3}\sum_{i=1}^{3} MCTA_i \qquad (1)$$
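The per-subset averaging and ranking step can be sketched in Python as below. This is a minimal sketch, not the official evaluation kit: it assumes the average in Formula (1) is a plain arithmetic mean of the three experiments' MCTA values, that a higher average gives a better (smaller) rank, and that ties share a rank. The function names `subset_scores` and `rank_subset` are hypothetical. Formula (2), which combines the subset rankings, is not reproduced here.

```python
from statistics import mean


def subset_scores(mcta_by_participant: dict[str, list[float]]) -> dict[str, float]:
    """Average the MCTA of the three experiments for each participant on one subset.

    Assumption: Formula (1) is a plain arithmetic mean.
    """
    scores = {}
    for name, values in mcta_by_participant.items():
        # All three experiments are required to appear in the ranking.
        if len(values) != 3:
            raise ValueError(f"{name}: all three experiments are required for ranking")
        scores[name] = mean(values)
    return scores


def rank_subset(scores: dict[str, float]) -> dict[str, int]:
    """Rank participants on one subset; a higher average MCTA gives a smaller rank.

    Assumption: tied scores share the same rank (competition ranking, e.g. 1, 1, 3).
    """
    ordered = sorted(scores.values(), reverse=True)
    return {name: ordered.index(score) + 1 for name, score in scores.items()}
```

For example, `rank_subset(subset_scores({"A": [0.8, 0.7, 0.6], "B": [0.9, 0.5, 0.4]}))` ranks A first (average 0.7) and B second (average 0.6).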

(2)

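$$MCTA = \frac{2 \times Precision \times Recall}{Precision + Recall}\left(1 - \frac{\sum_t mme_t^s}{\sum_t tp_t^s}\right)\left(1 - \frac{\sum_t mme_t^c}{\sum_t tp_t^c}\right) \qquad (3)$$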

where $Precision = 1 - \frac{\sum_t fp_t}{\sum_t h_t}$ and $Recall = 1 - \frac{\sum_t m_t}{\sum_t g_t}$. The parameters are defined as follows:

- $fp_t$: the number of false positives at time $t$.
- $m_t$: the number of misses at time $t$.
- $h_t$: the number of hypotheses at time $t$.
- $g_t$: the number of ground-truth objects at time $t$.
- $mme_t^s$: the number of mismatches at time $t$ within a single camera.
- $mme_t^c$: the number of mismatches at time $t$ across different cameras.
- $tp_t^s$: the number of true positives at time $t$ within a single camera.
- $tp_t^c$: the number of true positives at time $t$ across different cameras.

For $fp_t$, $m_t$, and $g_t$, see MOTA [1] for reference.
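As an illustration of how Formula (3) combines its three factors, the following Python sketch computes MCTA from per-frame counts. It is a minimal sketch under stated assumptions, not the evaluation kit: it assumes $Precision = 1 - \sum_t fp_t / \sum_t h_t$ and $Recall = 1 - \sum_t m_t / \sum_t g_t$, that the per-frame counts have already been obtained, and that the metric is treated as undefined (an error) when any denominator sums to zero. The `FrameCounts` container and the `mcta` function are hypothetical names.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass
class FrameCounts:
    """Per-frame counts for one experiment (hypothetical container)."""
    fp: int     # false positives at time t
    m: int      # misses at time t
    h: int      # hypotheses at time t
    g: int      # ground truths at time t
    mme_s: int  # mismatches within a single camera at time t
    mme_c: int  # mismatches across different cameras at time t
    tp_s: int   # true positives within a single camera at time t
    tp_c: int   # true positives across different cameras at time t


def mcta(frames: Iterable[FrameCounts]) -> float:
    """Compute MCTA by summing the per-frame counts over all t."""
    frames = list(frames)

    def total(field: str) -> int:
        return sum(getattr(f, field) for f in frames)

    h, g, tp_s, tp_c = total("h"), total("g"), total("tp_s"), total("tp_c")
    if min(h, g, tp_s, tp_c) == 0:
        # Assumption: MCTA is left undefined when a denominator is zero.
        raise ValueError("MCTA is undefined when a denominator sums to zero")

    precision = 1 - total("fp") / h
    recall = 1 - total("m") / g
    if precision + recall == 0:
        detection = 0.0
    else:
        detection = 2 * precision * recall / (precision + recall)  # F1 score

    tracking_sct = 1 - total("mme_s") / tp_s  # single-camera tracking factor
    tracking_ict = 1 - total("mme_c") / tp_c  # cross-camera tracking factor
    return detection * tracking_sct * tracking_ict
```

For instance, totals of 10 false positives in 100 hypotheses, 10 misses out of 100 ground truths, 8 single-camera mismatches over 80 single-camera true positives, and 1 cross-camera mismatch over 10 cross-camera true positives give $0.9 \times 0.9 \times 0.9 = 0.729$. Because the three factors are multiplied, a weak cross-camera association lowers MCTA even when single-camera detection and tracking are strong.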

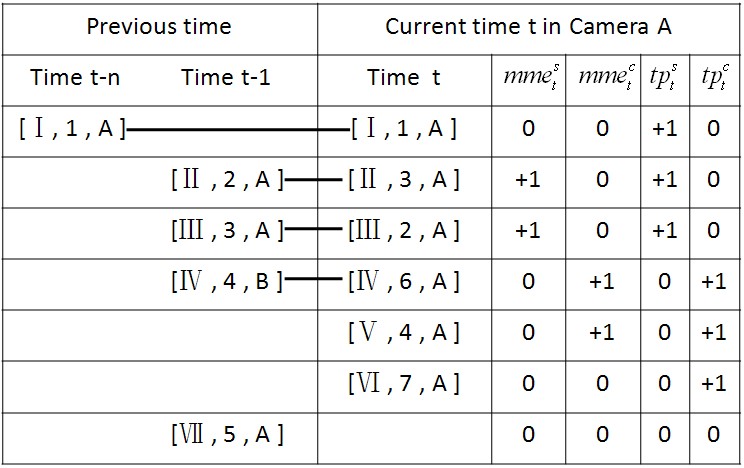

For $mme_t^s$, $mme_t^c$, $tp_t^s$ and $tp_t^c$, Table 1 gives a detailed explanation.


Table 1
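One way to read the split between the single-camera and cross-camera quantities is sketched below in Python. This is a hypothetical reading, not the official rule from Table 1: it assumes a true positive counts as cross-camera when the ground-truth identity was last matched in a different camera (otherwise single-camera), that a mismatch is a change of the hypothesis ID assigned to a ground-truth identity (as in CLEAR MOT [1]), and that each identity appears in at most one camera per frame. The tuple input format and the `count_sct_ict` name are assumptions. The returned values are totals over all frames, i.e. the sums used in Formula (3).

```python
from typing import Dict, Iterable, Tuple

# One matched detection: (t, camera, gt_id, hyp_id). Hypothetical input format.
Match = Tuple[int, int, int, int]


def count_sct_ict(matches: Iterable[Match]) -> Dict[str, int]:
    """Total tp_s, tp_c, mme_s and mme_c over all frames under the assumed rule."""
    last: Dict[int, Tuple[int, int]] = {}  # gt_id -> (camera, hyp_id) of its previous match
    counts = {"tp_s": 0, "tp_c": 0, "mme_s": 0, "mme_c": 0}
    for t, cam, gt, hyp in sorted(matches):  # process matches in time order
        prev = last.get(gt)
        # Cross-camera if this identity was last matched in another camera.
        cross = prev is not None and prev[0] != cam
        suffix = "c" if cross else "s"
        counts["tp_" + suffix] += 1
        # Mismatch if the hypothesis ID differs from the previous assignment.
        if prev is not None and prev[1] != hyp:
            counts["mme_" + suffix] += 1
        last[gt] = (cam, hyp)
    return counts
```

Under this reading, an identity switch while a target stays in one view lowers only the single-camera factor of MCTA, while an identity switch at a camera handover lowers only the cross-camera factor.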

[1] K. Bernardin and R. Stiefelhagen. Evaluating multiple object tracking performance: the CLEAR MOT metrics. EURASIP Journal on Image and Video Processing, 2008.

Evaluation Kit

The MCT challenge provides an evaluation kit that participants can use to evaluate their algorithms. The kit supports algorithms implemented in MATLAB and C, and can be downloaded below:

The MCT challenge evaluation kit

Submitted algorithms must be integrated with, and run through, the MCT challenge evaluation kit, which automatically runs the selected experiment on the NLPR_MCT dataset and scores the results according to the evaluation criterion.